Coursera ML(5)-Logistic Regression and Regularization with Python

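Linear regression can be used to estimate house prices and to analyze the stock market. Logistic Regression is a machine learning method widely used in industry to estimate the probability of an event: for example, the probability that a user buys a product, that a patient has a certain disease, or that an ad gets clicked. The related formula derivations are covered here.

This is the programming assignment (Exercise 2) from Andrew Ng's Stanford Machine Learning course on Coursera. The official download link seems to be blocked, so I am putting a copy here: http://home.ustc.edu.cn/~mmmwhy/machine-learning-ex2.zip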

Prerequisites

There are three cases to distinguish here: a good fit, overfitting, and underfitting.

- Cost Function

- Gradient Descent

- Gradient

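The cost function and the gradient each end with a small tail term, $\frac{\lambda}{2m}\sum_{j=1}^n \theta_j^2$ and $\frac{\lambda}{m}\theta_j$ respectively. These terms perform regularization and keep the model from overfitting.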
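For reference, the two tail terms belong to the standard regularized logistic regression formulas, which can be written out as below. This is the usual textbook form, assuming the hypothesis $h_\theta(x) = 1/(1+e^{-\theta^T x})$, $m$ training examples, $n$ features, a learning rate $\alpha$, and an intercept $\theta_0$ that is not regularized.

```latex
\begin{align}
J(\theta) &= -\frac{1}{m}\sum_{i=1}^{m}\Big[\,y^{(i)}\log h_\theta(x^{(i)})
            + (1-y^{(i)})\log\big(1-h_\theta(x^{(i)})\big)\Big]
            + \frac{\lambda}{2m}\sum_{j=1}^{n}\theta_j^2 \\
\frac{\partial J(\theta)}{\partial \theta_0} &= \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)\,x_0^{(i)} \\
\frac{\partial J(\theta)}{\partial \theta_j} &= \frac{1}{m}\sum_{i=1}^{m}\big(h_\theta(x^{(i)}) - y^{(i)}\big)\,x_j^{(i)}
            + \frac{\lambda}{m}\theta_j \qquad (j \ge 1) \\
\theta_j &:= \theta_j - \alpha\,\frac{\partial J(\theta)}{\partial \theta_j}
\end{align}
```

Setting $\lambda = 0$ removes both tail terms and gives back plain logistic regression.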

Logistic Regression

Problem description

- You will build a logistic regression model to predict whether a student gets admitted into a university (that is, predict admission from the exam scores).

- For each training example, you have the applicant’s scores on two exams and the admissions decision.

- Your task is to build a classification model that estimates an applicant’s probability of admission based on the scores from those two exams.

python code

    from numpy import *
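As a rough illustration of the approach (not the original listing), a minimal sketch using `scipy.optimize.minimize` might look like this. The data here is synthetic and generated on the spot, which is an assumption made so the example runs on its own; the real assignment loads the exam scores from a file instead.

```python
import numpy as np
from scipy.optimize import minimize


def sigmoid(z):
    """Logistic function; clipping avoids overflow warnings in exp()."""
    return 1.0 / (1.0 + np.exp(-np.clip(z, -500, 500)))


def cost(theta, X, y):
    """Unregularized logistic regression cost J(theta)."""
    h = sigmoid(X @ theta)
    eps = 1e-12  # keeps log() away from zero
    return -np.mean(y * np.log(h + eps) + (1 - y) * np.log(1 - h + eps))


def gradient(theta, X, y):
    """Gradient of J(theta) with respect to theta."""
    return X.T @ (sigmoid(X @ theta) - y) / len(y)


# Synthetic stand-in for the exam data (an assumption, not the course file):
# two scores in [30, 100] and a noisy admission label.
rng = np.random.default_rng(0)
scores = rng.uniform(30, 100, size=(100, 2))
noise = rng.normal(0, 10, size=100)
y = (scores.sum(axis=1) + noise > 130).astype(float)

# Prepend a column of ones for the intercept term theta_0.
X = np.column_stack([np.ones(len(y)), scores])

result = minimize(cost, x0=np.zeros(3), args=(X, y), jac=gradient, method="BFGS")
theta = result.x
accuracy = np.mean((sigmoid(X @ theta) >= 0.5) == y)
print("theta:", theta)
print("training accuracy:", accuracy)
```

Passing the analytic gradient through `jac` lets the optimizer skip numerical differentiation; any other method supported by `minimize` could be swapped in for `"BFGS"`.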

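Results

As a final test, I took a student who scored 45 on one exam and 85 on the other: the predicted probability of admission is 77%. Good thing this is abroad; in China, scores like these would not even get you into a vocational college.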
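The prediction itself is just the sigmoid of $\theta^T x$. The sketch below shows the arithmetic for the 45/85 student. The `theta` values are illustrative assumptions of roughly the magnitude such a fit produces; they are not taken from this post's run.

```python
import numpy as np


def sigmoid(z):
    """Logistic function, maps any real number into (0, 1)."""
    return 1.0 / (1.0 + np.exp(-z))


# Illustrative parameters only (assumed, not the fitted values from this post).
theta = np.array([-25.161, 0.206, 0.201])

# Leading 1.0 is the intercept feature; 45 and 85 are the two exam scores.
x = np.array([1.0, 45.0, 85.0])

prob = sigmoid(x @ theta)
print(f"admission probability: {prob:.3f}")
```

With these assumed parameters the probability lands close to the 77% quoted above.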

Logistic Regression and Regularization

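Problem

- Suppose you are the product manager of the factory and you have the test results for some microchips on two different tests.

- A batch of products goes through two test stages, and the test results are used to decide whether each product passes. For example, IKEA offers a 30-year mattress guarantee. Nobody can use a mattress for 30 years just to confirm that it holds up, so the only option is to infer from a few tests whether the product is acceptable.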

python code

    from numpy import *
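A sketch of the regularized version could look like the following (again, not the original listing). Three things are assumptions here: the data is synthetic, the helper `map_feature` is a hypothetical name for a polynomial feature expansion up to degree 6, and `lam` stands for $\lambda$.

```python
import numpy as np
from scipy.optimize import minimize


def sigmoid(z):
    """Logistic function; clipping avoids overflow warnings in exp()."""
    return 1.0 / (1.0 + np.exp(-np.clip(z, -500, 500)))


def map_feature(x1, x2, degree=6):
    """Hypothetical helper: all monomials x1^a * x2^b with a + b <= degree."""
    cols = [np.ones_like(x1)]
    for i in range(1, degree + 1):
        for j in range(i + 1):
            cols.append(x1 ** (i - j) * x2 ** j)
    return np.column_stack(cols)


def cost_reg(theta, X, y, lam):
    """Logistic cost plus the lambda/(2m) * sum(theta_j^2) penalty (j >= 1)."""
    h = sigmoid(X @ theta)
    eps = 1e-12  # keeps log() away from zero
    data_term = -np.mean(y * np.log(h + eps) + (1 - y) * np.log(1 - h + eps))
    penalty = lam / (2 * len(y)) * np.sum(theta[1:] ** 2)
    return data_term + penalty


def grad_reg(theta, X, y, lam):
    """Gradient with the lambda/m * theta_j term; theta_0 is not penalized."""
    grad = X.T @ (sigmoid(X @ theta) - y) / len(y)
    grad[1:] += lam / len(y) * theta[1:]
    return grad


# Synthetic stand-in for the microchip tests (an assumption): chips pass
# when both test results lie roughly inside a circle around the origin.
rng = np.random.default_rng(1)
tests = rng.uniform(-1, 1, size=(120, 2))
radius2 = (tests ** 2).sum(axis=1) + rng.normal(0, 0.1, size=120)
y = (radius2 < 0.5).astype(float)
X = map_feature(tests[:, 0], tests[:, 1])

lam = 1.0
res = minimize(cost_reg, np.zeros(X.shape[1]), args=(X, y, lam),
               jac=grad_reg, method="BFGS")
theta = res.x
accuracy = np.mean((sigmoid(X @ theta) >= 0.5) == y)
print("number of features:", X.shape[1])
print("training accuracy:", accuracy)
```

The polynomial expansion is what allows a linear classifier to draw a curved decision boundary, and it is also why regularization becomes necessary: with this many features the model can bend the boundary around individual points.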

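Results

- Overfitting

lambda=0. With the $\frac{\lambda}{2m}\sum_{j=1}^n \theta_j^2$ and $\frac{\lambda}{m}\theta_j$ terms switched off, the plot shows that the decision boundary is overfitted. A solution like this does not generalize.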

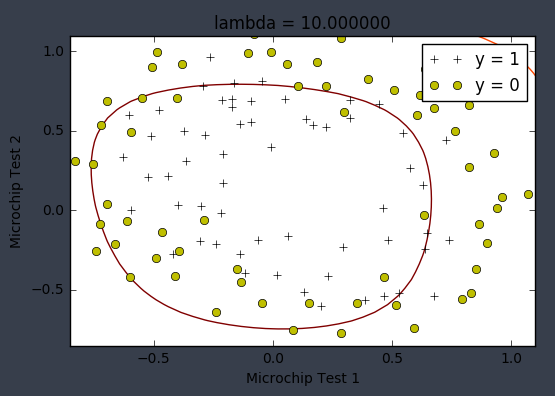

- Underfitting

lambda=10. Underfitting throws away much of the detail in the data.

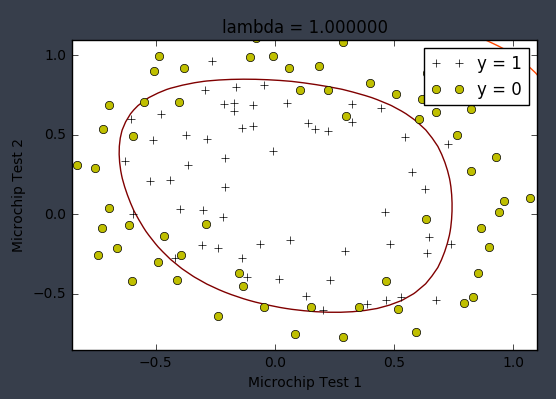

- Good fit

lambda=1. Accuracy is around 91%, which still seems on the low side; there is plenty of room for improvement.
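To see the effect of the three lambda values side by side, a small sweep like the one below can help. It is a sketch on synthetic data (an assumption), with a hypothetical `poly_features` helper, so the numbers it prints will not match the plots or the accuracy reported here.

```python
import numpy as np
from scipy.optimize import minimize


def poly_features(x1, x2, degree=6):
    """Hypothetical helper: all monomials x1^a * x2^b with a + b <= degree."""
    return np.column_stack([x1 ** (i - j) * x2 ** j
                            for i in range(degree + 1) for j in range(i + 1)])


def cost_and_grad(theta, X, y, lam):
    """Regularized logistic cost and its gradient, returned together."""
    m = len(y)
    h = 1.0 / (1.0 + np.exp(-np.clip(X @ theta, -500, 500)))
    eps = 1e-12  # keeps log() away from zero
    cost = -np.mean(y * np.log(h + eps) + (1 - y) * np.log(1 - h + eps))
    cost += lam / (2 * m) * np.sum(theta[1:] ** 2)
    grad = X.T @ (h - y) / m
    grad[1:] += lam / m * theta[1:]  # theta_0 is not penalized
    return cost, grad


# Synthetic two-test data with a noisy circular pass region (an assumption).
rng = np.random.default_rng(2)
pts = rng.uniform(-1, 1, size=(120, 2))
noisy_r2 = (pts ** 2).sum(axis=1) + rng.normal(0, 0.15, size=120)
y = (noisy_r2 < 0.5).astype(float)
X = poly_features(pts[:, 0], pts[:, 1])

norms, accuracies = {}, {}
for lam in (0.0, 1.0, 10.0):
    # jac=True tells minimize that the function returns (cost, gradient).
    res = minimize(cost_and_grad, np.zeros(X.shape[1]), args=(X, y, lam),
                   jac=True, method="BFGS")
    theta = res.x
    norms[lam] = np.linalg.norm(theta[1:])
    # sigmoid(z) >= 0.5 is equivalent to z >= 0.
    accuracies[lam] = np.mean((X @ theta >= 0) == y)
    print(f"lambda={lam:>4}: |theta|={norms[lam]:.2f}, "
          f"training accuracy={accuracies[lam]:.2f}")
```

A larger lambda shrinks the parameter vector, which smooths the decision boundary. Note that training accuracy alone tends to favor lambda=0; judging overfitting properly needs data held out from training.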

Summary

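In class, Xiong Hui said that machine learning requires parameter tuning, that the parameters are hard to tune, and that the practitioner needs to be extremely sensitive to the data.

The parameter lambda feels exactly like that. I really did just try values more or less at random, and then found that, hey, this one seems to work pretty well.

References: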

scipy.optimize.minimize

Logistic regression

Machine Learning Exercises In Python, Part 3

machine-learning-with-python-logistic

That's all.
