Coursera ML(3)-Multivariate Linear Regression: Python Implementation

Multivariate Linear Regression and Programming Exercise 1

Gradient Descent for Multiple Variables

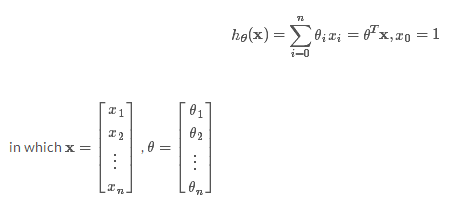

- Suppose we have n features; set the hypothesis to be:

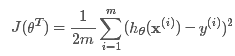
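The hypothesis itself is only given as an image here, so the following is a minimal sketch that assumes the standard course form h_theta(x) = theta_0 + theta_1 x_1 + ... + theta_n x_n, written as a matrix product with x_0 = 1. The function name `hypothesis` and the sample numbers are made up for illustration.

```python
import numpy as np

def hypothesis(X, theta):
    """Return h_theta(x) = theta^T x for every row of X.

    X is an (m, n+1) design matrix whose first column is all ones (x_0 = 1);
    theta is an (n+1,) parameter vector.
    """
    return X @ theta

# Hypothetical data: 3 examples with n = 2 features each.
features = np.array([[2104.0, 3.0],
                     [1600.0, 3.0],
                     [2400.0, 4.0]])
# Prepend the bias column x_0 = 1.
X = np.column_stack([np.ones(len(features)), features])
theta = np.array([1.0, 0.5, 2.0])

predictions = hypothesis(X, theta)
```

Prepending the column of ones lets the intercept theta_0 be handled by the same matrix product as the other parameters.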

Cost Function

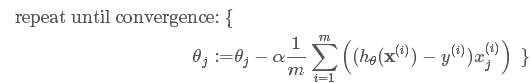
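A minimal sketch of the cost, assuming the usual squared-error form from the course, J(theta) = 1/(2m) * sum((h_theta(x_i) - y_i)^2). The name `compute_cost` mirrors the exercise's `computeCost` but is my own Python spelling, and the tiny data set is invented.

```python
import numpy as np

def compute_cost(X, y, theta):
    """Squared-error cost J(theta) = 1/(2m) * sum((X @ theta - y)^2)."""
    m = len(y)
    residuals = X @ theta - y
    return (residuals @ residuals) / (2 * m)

# Hypothetical data lying exactly on the line y = x.
X = np.array([[1.0, 1.0],
              [1.0, 2.0],
              [1.0, 3.0]])
y = np.array([1.0, 2.0, 3.0])

cost_perfect = compute_cost(X, y, np.array([0.0, 1.0]))  # exact fit
cost_zero = compute_cost(X, y, np.zeros(2))              # all-zero parameters
```

With the exact-fit parameters the cost is zero; with all-zero parameters it is (1 + 4 + 9) / 6.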

Gradient Descent Algorithm

Feature scaling: get every feature into approximately the range [-1, 1]. Just normalize all the features :)

Learning rate: not too big (gradient descent may fail to converge), not too small (convergence is too slow).
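One common way to do the scaling is mean normalization: subtract each feature's mean and divide by its standard deviation. The sketch below assumes that approach; `feature_normalize` is a hypothetical helper name, and note that NumPy's `std` defaults to the population standard deviation, which may differ slightly from what MATLAB/Octave's `std` returns.

```python
import numpy as np

def feature_normalize(X):
    """Scale each column of X to zero mean and unit standard deviation.

    Returns the scaled matrix plus the per-column mean and standard
    deviation, so that new inputs can be scaled the same way later.
    """
    mu = X.mean(axis=0)
    sigma = X.std(axis=0)
    sigma[sigma == 0] = 1.0  # leave constant columns unscaled
    return (X - mu) / sigma, mu, sigma

# Hypothetical data: two features on very different scales.
X = np.array([[2104.0, 3.0],
              [1600.0, 3.0],
              [2400.0, 4.0],
              [1416.0, 2.0]])
X_norm, mu, sigma = feature_normalize(X)
```

After scaling, both columns have comparable magnitudes, which is what lets a single learning rate work for every parameter.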

- Polynomial Regression: use feature scaling, since the powers of a feature span very different ranges. (Somewhat like normalizing dimensions.)
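To see why scaling matters for polynomial regression, here is a small sketch that builds the powers of one feature and then scales each column. The helper `polynomial_features` and the input values are assumptions for illustration only.

```python
import numpy as np

def polynomial_features(x, degree):
    """Stack x, x^2, ..., x^degree as the columns of a matrix."""
    return np.column_stack([x ** d for d in range(1, degree + 1)])

# Hypothetical single feature.
x = np.array([1.0, 2.0, 3.0, 4.0, 5.0])
X_poly = polynomial_features(x, 3)

# The columns now reach 5, 25 and 125, so scale each one
# to zero mean and unit standard deviation.
mu = X_poly.mean(axis=0)
sigma = X_poly.std(axis=0)
X_scaled = (X_poly - mu) / sigma
```

Without the scaling step, the cubic column would dominate the gradient and force a much smaller learning rate.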

Programming Exercise 1

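Downloading the Program and Data

This is the programming assignment (Exercise 1) for Andrew Ng's Stanford Machine Learning course on Coursera. The official download link appears to be blocked, so I am putting a download link here.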

http://home.ustc.edu.cn/~mmmwhy/machine-learning-ex1.zip

Let's re-derive it:

There are really only two formulas here:

- computeCost

- gradientDescent
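The two formulas can be sketched in vectorized form as follows. This assumes the standard course definitions (squared-error cost, and the simultaneous update theta := theta - alpha/m * X^T (X theta - y)); it is not the code from the exercise, and the synthetic data and learning rates are chosen only for the demo.

```python
import numpy as np

def compute_cost(X, y, theta):
    """J(theta) = 1/(2m) * sum((X @ theta - y)^2)."""
    residuals = X @ theta - y
    return (residuals @ residuals) / (2 * len(y))

def gradient_descent(X, y, theta, alpha, num_iters):
    """Batch gradient descent, updating all parameters simultaneously.

    Returns the final parameters and the cost after every iteration.
    """
    m = len(y)
    theta = theta.copy()
    cost_history = []
    for _ in range(num_iters):
        gradient = X.T @ (X @ theta - y) / m
        theta = theta - alpha * gradient
        cost_history.append(compute_cost(X, y, theta))
    return theta, cost_history

# Synthetic, noise-free data generated from y = 1 + 2x.
x = np.linspace(-1.0, 1.0, 20)
X = np.column_stack([np.ones_like(x), x])
y = 1.0 + 2.0 * x

# A moderate learning rate converges to the true parameters.
theta, cost_history = gradient_descent(X, y, np.zeros(2), alpha=0.5, num_iters=500)

# A learning rate that is too large makes the cost grow instead.
_, diverged_history = gradient_descent(X, y, np.zeros(2), alpha=2.5, num_iters=50)
```

Recording the cost at every iteration is a cheap way to check the learning rate: the history should fall steadily, and a rising history means alpha is too large.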

Python Fitting Implementation

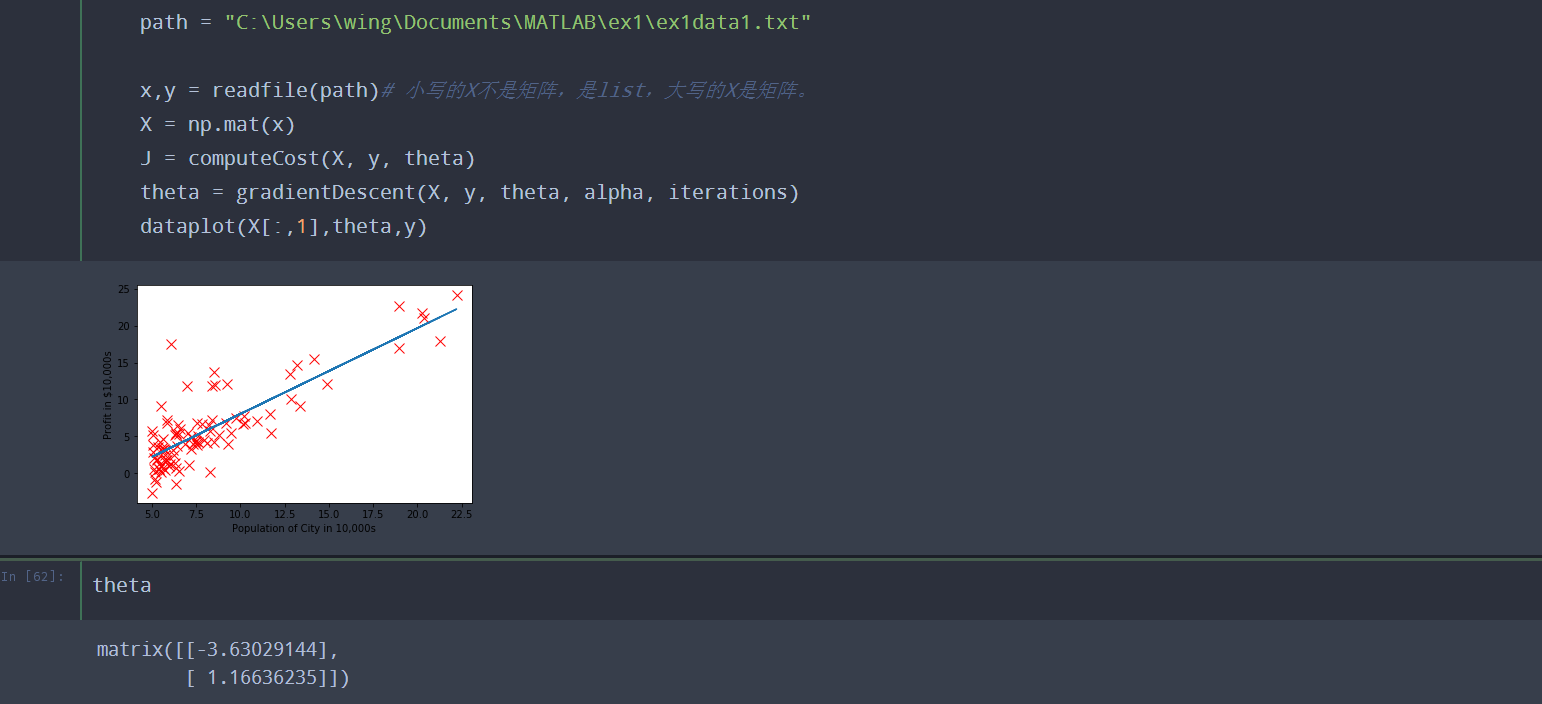

The original code was in MATLAB; I implemented it in Python, and the results are the same:

    import numpy as np

The output figure is a bit small, but it will do.
